生成 TPCH 数据并导入到 Hive

Hive SQL迁移 Spark SQL 在网易传媒的实践

如何彻底解决 Hive 小文件问题

Hive SQL 迁移 Spark SQL 在滴滴的实践

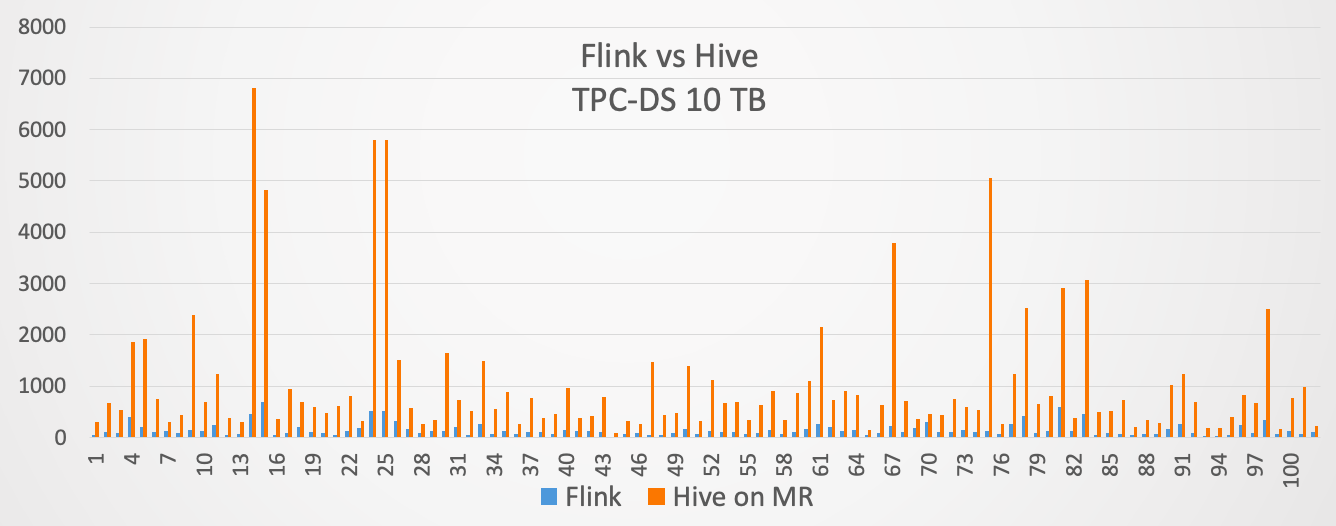

Flink 1.11 与 Hive 批流一体数仓实践

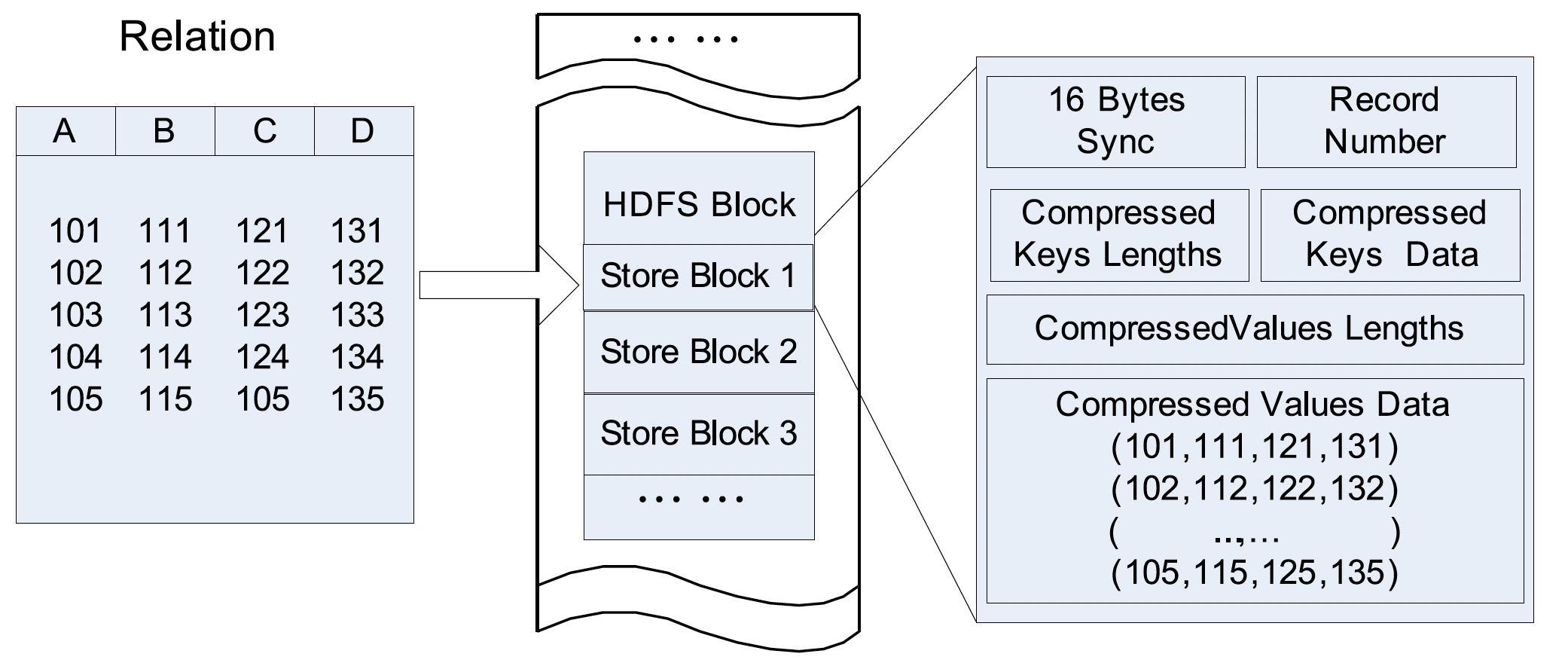

从行存储到 RCFile,Facebook 为什么要设计出 RCFile?

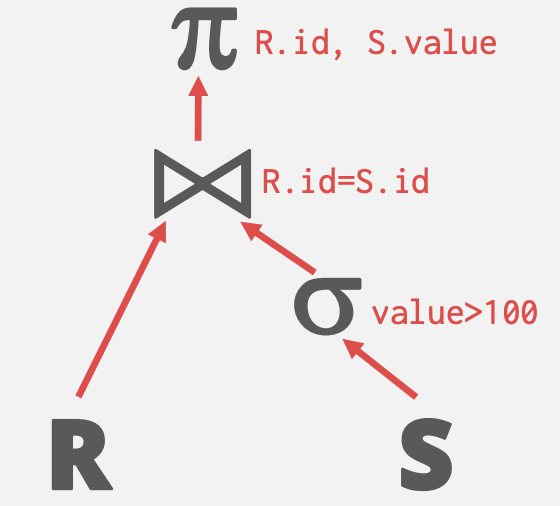

图文介绍 SQL 的三种查询计划处理模型

在 Hive 中使用 OpenCSVSerde

下面文章您可能感兴趣

However, for various reasons we may need to drop this external table. Unfortunately, doing so fails with the following exception:

```
hive> drop table order_info;
FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:Table metadata not deleted since hdfs://iteblogcluster/user/iteblog_hadoop/order_info is not writable by iteblog)
```

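This happens because, when dropping a table, Hive checks whether the current user has write permission on the parent directory of the table's location (in this example /user/iteblog_hadoop/order_info, as the error message shows). The relevant code is: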

```java
if (tbl.getSd().getLocation() != null) {
  tblPath = new Path(tbl.getSd().getLocation());
  // The write check is applied to the parent of the table location,
  // regardless of whether the table is managed or external.
  if (!wh.isWritable(tblPath.getParent())) {
    String target = indexName == null ? "Table" : "Index table";
    throw new MetaException(target + " metadata not deleted since " +
        tblPath.getParent() + " is not writable by " +
        hiveConf.getUser());
  }
}
```

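Data that other teams share with us is usually read-only; we have no write permission on it. Since dropping an external table removes only its metadata and does not delete its data, requiring write permission here is unnecessary, so the logic above is flawed.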

There are roughly two ways to solve this.

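Option 1: patch Hive so that, if the table is external, it skips the check on whether the current user can write to the data directory. The implementation is as follows: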

```diff
diff --git a/metastore/src/java/org/apache/hadoop/hive/metastore/HiveMetaStore.java b/metastore/src/java/org/apache/hadoop/hive/metastore/HiveMetaStore.java
index 4520cd52539e26afebf9553ce411c9d4ee03cda0..1ae9b9771617637d8b8b9b16f7c5184d77e28d5a 100644
--- a/metastore/src/java/org/apache/hadoop/hive/metastore/HiveMetaStore.java
+++ b/metastore/src/java/org/apache/hadoop/hive/metastore/HiveMetaStore.java
@@ -1360,13 +1360,20 @@ private void drop_table_core(final RawStore ms, final String dbname, final Strin
         throw new MetaException(e.getMessage());
       }
     }
+
     isExternal = isExternal(tbl);
     if (tbl.getSd().getLocation() != null) {
       tblPath = new Path(tbl.getSd().getLocation());
-      if (!wh.isWritable(tblPath.getParent())) {
-        throw new MetaException("Table metadata not deleted since " +
-            tblPath.getParent() + " is not writable by " +
-            hiveConf.getUser());
+
+      //Check that the user is owner of the table
+      if (!tbl.getOwner().equals(hiveConf.getUser())) {
+        throw new MetaException("Table metadata not deleted since user " + hiveConf.getUser()
+            + " is not the owner of table " + tbl.getTableName());
+      }
+      // if table is internal, check that table location is writable by the user
+      if (!wh.isWritable(tblPath.getParent())&&!isExternal) {
+        throw new MetaException("Table metadata not deleted since " + tblPath.getParent()
+            + " is not writable by " + hiveConf.getUser());
       }
     }
```

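The patch above is taken from HIVE-9020; see that issue for details. Besides skipping the write check for external tables, it also adds a check that the current user is the owner of the table, so a user who does not own the table still cannot drop it.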
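To make the difference between the two checks concrete, here is a minimal, self-contained Java model of the permission logic before and after the HIVE-9020 patch. It is a sketch, not Hive code: `DropTableCheck`, the `Table` record, `parentOf`, and the set of writable directories are hypothetical stand-ins for the metastore objects and the HDFS permission lookup done by `wh.isWritable`; the table's exact location and its owner are also assumed for illustration.

```java
import java.util.Set;

// Hypothetical model of the drop-table permission logic before and after HIVE-9020.
// No Hive or HDFS API is used: "writable" directories are just a set of path strings.
// Requires Java 16+ (record).
public class DropTableCheck {

    // Minimal stand-in for the table metadata the check reads.
    record Table(String name, String owner, String location, boolean external) {}

    // Parent of a location, ignoring a trailing slash (simplified: no root/scheme-only handling).
    static String parentOf(String location) {
        String p = location.endsWith("/") ? location.substring(0, location.length() - 1) : location;
        return p.substring(0, p.lastIndexOf('/'));
    }

    // Old behaviour: always require write access to the parent of the table location.
    static void checkBefore(Table t, String user, Set<String> writable) {
        String parent = parentOf(t.location());
        if (!writable.contains(parent)) {
            throw new IllegalStateException("Table metadata not deleted since "
                    + parent + " is not writable by " + user);
        }
    }

    // Patched behaviour: owner check first, write check only for managed (non-external) tables.
    static void checkAfter(Table t, String user, Set<String> writable) {
        if (!t.owner().equals(user)) {
            throw new IllegalStateException("Table metadata not deleted since user "
                    + user + " is not the owner of table " + t.name());
        }
        String parent = parentOf(t.location());
        if (!writable.contains(parent) && !t.external()) {
            throw new IllegalStateException("Table metadata not deleted since "
                    + parent + " is not writable by " + user);
        }
    }

    // Returns true if the check passes, false if it throws.
    static boolean allowed(Runnable check) {
        try {
            check.run();
            return true;
        } catch (IllegalStateException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed values: an external table owned by iteblog, on a path iteblog cannot write to.
        Table t = new Table("order_info", "iteblog",
                "hdfs://iteblogcluster/user/iteblog_hadoop/order_info", true);
        Set<String> writable = Set.of("hdfs://iteblogcluster/user/iteblog");
        System.out.println("before HIVE-9020: " + allowed(() -> checkBefore(t, "iteblog", writable)));
        System.out.println("after  HIVE-9020: " + allowed(() -> checkAfter(t, "iteblog", writable)));
    }
}
```

In this model the old check refuses the drop while the patched one allows it. If the table were owned by a different user, the patched check would still refuse because of the new owner check.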

Option 2: rely on functionality Hive already provides. Change the location of the external table (order_info) to a directory we can write to, and then drop the table:

```
hive> alter table order_info set location 'hdfs://iteblogcluster/user/iteblog/tmp/';
hive> drop table order_info;
OK
Time taken: 0.254 seconds
```

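This time dropping order_info succeeds. It works because the check runs against the parent of the new location, /user/iteblog, which user iteblog can write to, and because dropping an external table does not delete its data.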

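If the external table is partitioned, dropping a partition may fail with the same error. In that case, change that partition's location to a writable directory as well, after which the partition can be dropped: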

```
ALTER TABLE order_info PARTITION (dt='2017-06-27') SET LOCATION "new location";
```
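For a table with many partitions, this workaround is easy to script. The sketch below only builds the HiveQL text and does not execute anything. The class `RelocateThenDrop`, its `statements` method, and the second partition value are hypothetical; it also assumes every partition may be pointed at the same writable directory, which should be harmless for an external table since dropping it does not delete data. Values are concatenated without any escaping, so use it only with trusted input.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: generates (but does not run) the HiveQL for the workaround.
// Each partition is relocated to a writable directory and dropped, then the table
// itself is relocated and dropped. No quoting or escaping is performed.
public class RelocateThenDrop {

    static List<String> statements(String table, List<String> partitionSpecs, String writableDir) {
        List<String> out = new ArrayList<>();
        for (String spec : partitionSpecs) {
            // Point the partition at a directory whose parent the user can write to...
            out.add("ALTER TABLE " + table + " PARTITION (" + spec + ") SET LOCATION '" + writableDir + "';");
            // ...after which the partition can be dropped.
            out.add("ALTER TABLE " + table + " DROP PARTITION (" + spec + ");");
        }
        // Finally handle the table itself, as in the unpartitioned case.
        out.add("ALTER TABLE " + table + " SET LOCATION '" + writableDir + "';");
        out.add("DROP TABLE " + table + ";");
        return out;
    }

    public static void main(String[] args) {
        // The second partition value is made up for illustration.
        List<String> specs = List.of("dt='2017-06-27'", "dt='2017-06-28'");
        for (String stmt : statements("order_info", specs, "hdfs://iteblogcluster/user/iteblog/tmp/")) {
            System.out.println(stmt);
        }
    }
}
```

The generated statements can be pasted into the Hive CLI. Keeping generation separate from execution makes it easy to review the list before anything is changed.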
