1. Basic Operations on Delta Lakes

What is Delta Lake?

How to start using Delta Lake

Using Delta Lake via local Spark shells

Leveraging GitHub or Maven

Using Databricks Community Edition

Basic operations

Creating your first Delta table

Unpacking the Transaction Log

What Is the Delta Lake Transaction Log?

How Does the Transaction Log Work?

Dealing With Multiple Concurrent Reads and Writes

Other Use Cases

Diving further into the transaction log

Table Utilities

Review table history

Vacuum History

Retrieve Delta table details

Generate a manifest file

Convert a Parquet table to a Delta table

Convert a Delta table to a Parquet table

Restore a table version

Summary

2. Time Travel with Delta Lake

Introduction

Under the hood of a Delta Table

The Delta Directory

Delta Logs Directory

The files of a Delta table

Time Travel

Common Challenges with Changing Data

Working with Time Travel

Time travel use cases

Time travel considerations

Summary

3. Continuous Applications with Delta Lake

Make All Your Streams Come True

Spark Streaming Was Built to Unify Batch and Streaming

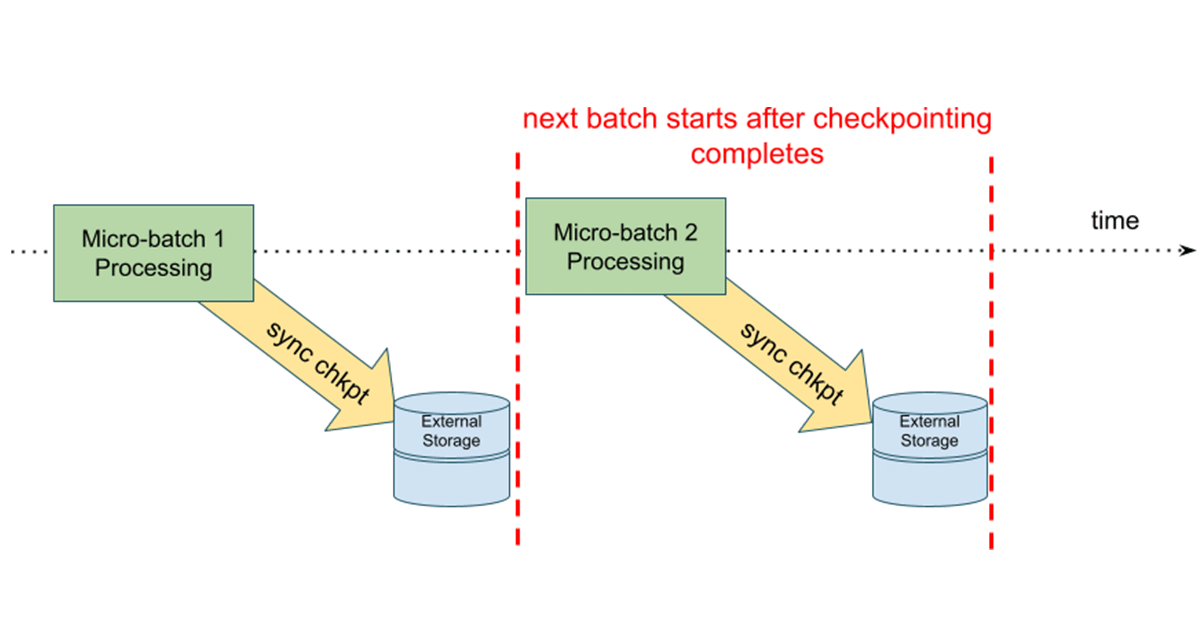

Exactly-Once Semantics

Putting Some Structure Around Streaming

Streaming with Delta

Delta as a Stream Source

Ignore Updates and Deletes

Delta Table as a Sink

Appendix

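Unless otherwise stated, all articles on this blog are original. Copyright of original articles belongs to 过往记忆大数据 (过往记忆); reproduction without permission is prohibited.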

Link to this article: Delta Lake: The Definitive Guide Preview Download (https://www.iteblog.com/archives/9970.html)